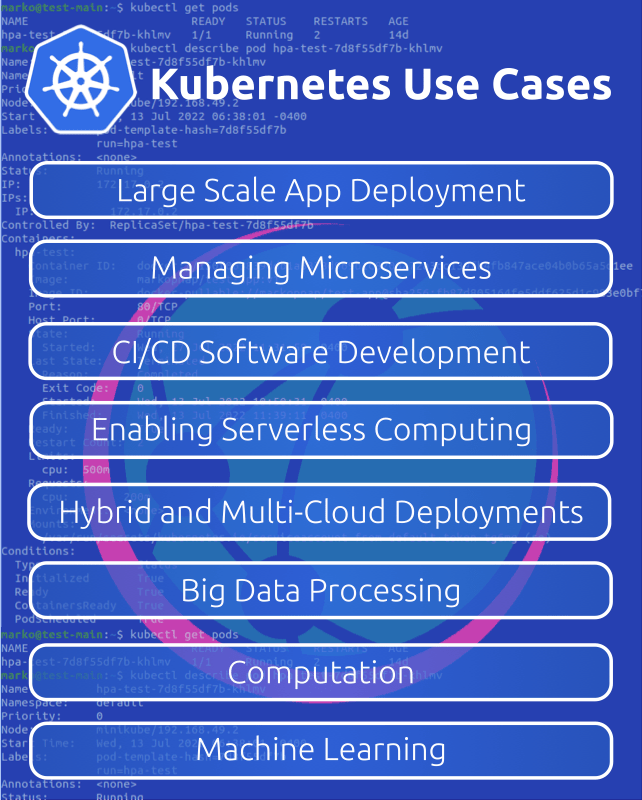

Kubernetes Use Cases

Containers are rapidly replacing virtual machines as the go-to solution for simplifying and optimizing application deployment. The rise of containerized apps ushered in a new niche of tools: container orchestrators.

The primary goal of container orchestration solutions such as Kubernetes, Docker Swarm, and Amazon ECS is to automate the provisioning and management of containers. However, their use cases go beyond simple deployment automation.

Large Scale App Deployment

With automation capabilities and a declarative approach to configuration, Kubernetes is designed to handle large apps. Features such as horizontal pod autoscaling, coupled with load balancing, allow developers to set up systems with minimal downtime. Kubernetes helps keep everything up and running even during unpredictable moments in an app's life, such as traffic surges and hardware failures.
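The Kubernetes documentation describes the Horizontal Pod Autoscaler's core rule as `desiredReplicas = ceil(currentReplicas * currentMetricValue / desiredMetricValue)`. The controller skips scaling when that ratio falls within a tolerance of 1.0, which is 0.1 by default. The sketch below reproduces only this rule and clamps the result to the min/max replica bounds. The function name is hypothetical, and the real controller also accounts for missing metrics, pod readiness, and stabilization windows, which this sketch ignores.

```python
import math


def desired_replicas(current_replicas: int,
                     current_metric: float,
                     target_metric: float,
                     min_replicas: int = 1,
                     max_replicas: int = 10,
                     tolerance: float = 0.1) -> int:
    """Simplified HPA rule: scale replicas by the observed/target metric ratio."""
    ratio = current_metric / target_metric
    if abs(ratio - 1.0) <= tolerance:
        # Close enough to the target: keep the current count to avoid flapping.
        desired = current_replicas
    else:
        desired = math.ceil(current_replicas * ratio)
    # Clamp to the configured minReplicas/maxReplicas bounds.
    return max(min_replicas, min(max_replicas, desired))


# 4 pods averaging 90% CPU against a 50% target -> ratio 1.8 -> ceil(7.2) = 8 pods.
print(desired_replicas(4, 90, 50))
# A tenfold traffic surge would ask for 40 pods but is capped at max_replicas.
print(desired_replicas(4, 500, 50))
```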

One of the challenges large-scale app developers face is efficiently managing the environment, including IPs, networks, and resources. Platforms such as Glimpse adopted Kubernetes to deal with this challenge.

Glimpse uses Kubernetes together with tools such as the Kube Prometheus Stack, Tiller, and the EFK stack (Elasticsearch, Fluentd, and Kibana) to organize cluster monitoring.

Managing Microservices

Many modern applications use a microservice architecture to simplify and speed up code management. Microservices are apps within apps: services with separate functions that communicate with each other.

Microservice-to-microservice communication is one of the more frequent challenges for developers who adopt this architecture. Kubernetes is a common choice for managing communication between application components. It can control component behavior when a failure occurs, facilitate authentication, and distribute resources across microservices.
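Much of this communication rests on Kubernetes Services. A Service selects pods by their labels and forwards traffic only to pods that are ready. Other microservices reach it under a DNS name of the form `<service>.<namespace>.svc.cluster.local`, where `cluster.local` is the default cluster domain. The sketch below models equality-based label selection and endpoint filtering in plain Python. The `Pod` class, the function names, and the pod names, labels, and IPs are all hypothetical, and the sketch assumes a non-empty selector.

```python
from dataclasses import dataclass, field


@dataclass
class Pod:
    name: str
    ip: str
    labels: dict[str, str] = field(default_factory=dict)
    ready: bool = True


def matches(selector: dict[str, str], labels: dict[str, str]) -> bool:
    """Equality-based selector: every selector key/value must appear in the pod labels."""
    return all(labels.get(key) == value for key, value in selector.items())


def service_endpoints(selector: dict[str, str], pods: list[Pod], port: int) -> list[str]:
    """Addresses a Service would route to: matching pods that are also ready."""
    return [f"{p.ip}:{port}" for p in pods if p.ready and matches(selector, p.labels)]


def service_dns_name(service: str, namespace: str, cluster_domain: str = "cluster.local") -> str:
    """Name other microservices use to reach the Service inside the cluster."""
    return f"{service}.{namespace}.svc.{cluster_domain}"


pods = [
    Pod("orders-1", "10.0.0.5", {"app": "orders"}),
    Pod("orders-2", "10.0.0.6", {"app": "orders"}, ready=False),  # e.g. failing its readiness probe
    Pod("cart-1", "10.0.0.7", {"app": "cart"}),
]
print(service_endpoints({"app": "orders"}, pods, 8080))
print(service_dns_name("orders", "shop"))
```

Because the not-ready pod is dropped from the endpoint list, callers are not routed to a failing replica.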

CI/CD Software Development

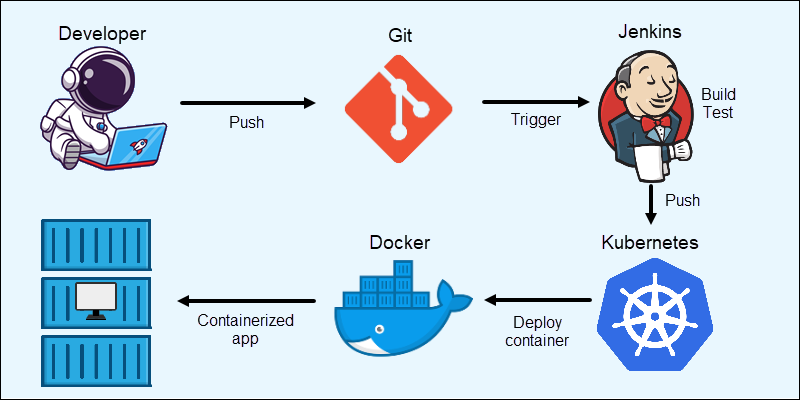

CI/CD (Continuous Integration and Continuous Delivery/Deployment) is a set of automation practices for building, testing, and deploying software. CI/CD pipelines are built on automation and on speeding up the entire development process.

Many DevOps tools participate in the workflow of CI/CD pipelines. Kubernetes is often employed as the container orchestrator of choice, together with the Jenkins automation server, Docker, and other tools.

Setting up a Kubernetes CI/CD workflow allows the pipeline to leverage the platform's capabilities, such as automation and efficient resource management.
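At its core, a CI/CD pipeline is an ordered list of stages. A failure at any stage stops everything after it, so a broken build never reaches the cluster. The sketch below models only that control flow. The stage names are hypothetical, and each step is a stub standing in for real tooling such as `docker build`, a test runner, `docker push`, and `kubectl apply` or `helm upgrade`.

```python
from typing import Callable


def run_pipeline(stages: list[tuple[str, Callable[[], bool]]]) -> list[str]:
    """Run CI/CD stages in order and stop at the first failing stage.

    Each stage is a (name, step) pair where step() returns True on success.
    Returns the names of the stages that completed successfully.
    """
    completed: list[str] = []
    for name, step in stages:
        if not step():
            # A failed build or test must never reach the deploy stage.
            print(f"stage '{name}' failed; stopping pipeline")
            break
        completed.append(name)
    return completed


# Hypothetical stages with stubbed steps.
stages = [
    ("build", lambda: True),
    ("test", lambda: False),  # simulate a failing test suite
    ("push", lambda: True),
    ("deploy", lambda: True),
]
print(run_pipeline(stages))
```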

Below is an example of a typical CI/CD pipeline for Kubernetes cluster deployment:

Enabling Serverless Computing

Serverless computing refers to a cloud-native model in which backend server-related services are abstracted away from the development process. Cloud providers take care of server provisioning and maintenance, while developers design and containerize the app.

While all major cloud providers offer serverless models, Kubernetes allows the creation of an independent serverless platform that provides greater control over the backend processes. A Kubernetes-driven serverless environment lets developers focus on the product while retaining control over the infrastructure.
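A defining trait of serverless platforms is scale-to-zero: no pods run while there is no traffic. The sketch below shows a hypothetical concurrency-based rule of this kind. It is not the algorithm of any specific Kubernetes serverless framework. The parameter names, the 60-second idle grace period, and the replica cap are illustrative assumptions.

```python
import math


def serverless_replicas(in_flight: int, target_concurrency: int,
                        current_replicas: int, seconds_idle: float,
                        idle_grace_seconds: float = 60.0,
                        max_replicas: int = 50) -> int:
    """Hypothetical scale-to-zero rule for a Kubernetes-hosted serverless app."""
    if in_flight == 0:
        # No traffic: keep the current pods warm for a grace period, then drop to
        # zero so the app holds no cluster resources while idle.
        return 0 if seconds_idle >= idle_grace_seconds else current_replicas
    # With traffic (including a cold start from zero): run enough pods that none
    # handles more than target_concurrency requests at once.
    return min(max_replicas, math.ceil(in_flight / target_concurrency))


print(serverless_replicas(0, 10, 3, seconds_idle=120))  # idle long enough: scale to zero
print(serverless_replicas(25, 10, 0, seconds_idle=0))   # cold start: ceil(25 / 10) pods
```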

Hybrid and Multi-Cloud Deployments

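Hybrid and multi-cloud environments are infrastructure models that integrate more than one environment into the development process. A hybrid cloud combines on-premises or private infrastructure with a public cloud, while a multi-cloud setup uses several public cloud providers. By using more than one cloud, companies can avoid vendor lock-in, increase flexibility, and improve app performance.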

Kubernetes helps developers achieve application portability in hybrid and multi-cloud environments. Its environment-agnostic approach reduces the need for platform-specific app dependencies.

Kubernetes concepts such as services, ingress controllers, and volumes provide abstraction from the underlying infrastructure. Furthermore, built-in auto-healing and fault tolerance make Kubernetes well suited for scaling in a multi-cloud environment.
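Auto-healing comes from Kubernetes controllers running a control loop. Each pass compares the desired state (for example, "three replicas") with the observed state and takes corrective action. This works the same way regardless of which cloud hosts the nodes. The sketch below performs one reconciliation pass for a replica count. The function, pod names, and naming scheme are hypothetical. Deleting surplus pods in name order is a simplification, since the real ReplicaSet controller uses its own ranking to choose which pods to remove.

```python
import itertools
from typing import Callable


def reconcile(desired: int, running: set[str],
              new_name: Callable[[], str]) -> tuple[list[str], list[str]]:
    """One pass of a control loop: diff desired vs. observed state.

    Returns (pods_to_create, pods_to_delete).
    """
    missing = max(0, desired - len(running))
    surplus = max(0, len(running) - desired)
    to_create = [new_name() for _ in range(missing)]
    to_delete = sorted(running)[:surplus]  # simplified choice of victims
    return to_create, to_delete


counter = itertools.count(4)
running = {"web-1", "web-2", "web-3"}
running.discard("web-2")  # e.g. the node hosting web-2 failed
create, delete = reconcile(3, running, lambda: f"web-{next(counter)}")
running = (running | set(create)) - set(delete)
print(sorted(running))  # ['web-1', 'web-3', 'web-4']
```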

Big Data Processing

Companies that deal with big data, particularly those that operate in the cloud, frequently employ Kubernetes to support their software.

The primary reasons for the adoption are:

Ensuring the portability of the software, which is usually scattered across multiple environments.

Packaging big data apps to ensure repeatability. Complex systems such as Spark, Hadoop, and Cassandra require strict component compatibility.
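Repeatable packaging usually depends on pinning each component's container image to a specific tag or, more strictly, to an immutable digest (`name@sha256:...`). A reference without a tag falls back to `:latest`, which can point to different content over time. The sketch below flags image references that are not pinned. The function names and all image references are hypothetical, and only the tag and `@sha256:` digest forms are handled.

```python
def image_pin_level(image: str) -> str:
    """Classify how strictly a container image reference is pinned."""
    if "@sha256:" in image:
        return "digest"  # content-addressed, immutable
    # Look only at the last path segment so a registry port ("host:5000/")
    # is not mistaken for a tag.
    last_segment = image.rsplit("/", 1)[-1]
    if ":" not in last_segment:
        return "unpinned"  # no tag: the runtime falls back to ":latest"
    tag = last_segment.rsplit(":", 1)[1]
    return "unpinned" if tag == "latest" else "tag"


def unpinned_images(images: list[str]) -> list[str]:
    """Images whose content could silently change between two deployments."""
    return [img for img in images if image_pin_level(img) == "unpinned"]


stack = [  # hypothetical image references for a big data stack
    "registry.example.com:5000/spark:3.5.1",
    "registry.example.com:5000/hadoop",
    "cassandra:latest",
    "registry.example.com/spark@sha256:" + "0" * 64,
]
print(unpinned_images(stack))
```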

Scientific Computing

Multiple large-scale scientific computing facilities use Kubernetes to replace traditional batch systems such as SLURM and HTCondor. With Kubernetes, scientists can deploy analytical tools and pipelines without relying on IT experts. The orchestration platform also handles resource management by scaling the resources allocated to pipelines, and it helps make the entire system more resilient.
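Kubernetes resource management starts with scheduling. Each pod declares resource requests: CPU in cores or millicores (`500m` is half a core) and memory in units such as `Mi` and `Gi`. The scheduler only places a pod on a node with enough unreserved capacity. The sketch below mimics a filter-then-score pass. The "most free CPU" score is a simplified stand-in, not the scheduler's actual default scoring. Only a subset of the quantity syntax is parsed, and the node names and capacities are hypothetical.

```python
from typing import Optional

MEMORY_SUFFIXES = {"Ki": 2**10, "Mi": 2**20, "Gi": 2**30}


def cpu_millicores(quantity: str) -> int:
    """'500m' -> 500, '2' -> 2000, '0.5' -> 500."""
    if quantity.endswith("m"):
        return int(quantity[:-1])
    return round(float(quantity) * 1000)


def memory_bytes(quantity: str) -> int:
    """'512Mi' -> 536870912; only Ki/Mi/Gi suffixes and plain bytes are handled."""
    for suffix, factor in MEMORY_SUFFIXES.items():
        if quantity.endswith(suffix):
            return int(quantity[: -len(suffix)]) * factor
    return int(quantity)


def pick_node(request: dict[str, str], free: dict[str, dict[str, str]]) -> Optional[str]:
    cpu_needed = cpu_millicores(request["cpu"])
    mem_needed = memory_bytes(request["memory"])
    # Filter: keep only nodes with enough unreserved CPU and memory.
    feasible = [
        name for name, cap in free.items()
        if cpu_millicores(cap["cpu"]) >= cpu_needed
        and memory_bytes(cap["memory"]) >= mem_needed
    ]
    if not feasible:
        return None  # a real pod would stay Pending until capacity frees up
    # Score: prefer the node with the most free CPU (simplified stand-in).
    return max(feasible, key=lambda name: cpu_millicores(free[name]["cpu"]))


nodes = {  # hypothetical free capacity per node
    "node-a": {"cpu": "1500m", "memory": "2Gi"},
    "node-b": {"cpu": "4", "memory": "1Gi"},
    "node-c": {"cpu": "3", "memory": "8Gi"},
}
print(pick_node({"cpu": "500m", "memory": "2Gi"}, nodes))
print(pick_node({"cpu": "8", "memory": "1Gi"}, nodes))
```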

CERN in Switzerland, one of the most prominent laboratories in the world, uses Kubernetes for its built-in monitoring and logging capabilities. Introducing container orchestration into its workflow allowed CERN scientists to significantly speed up adding new cluster nodes and autoscaling replicas for system controllers. Eliminating most virtual machines also lowered the virtualization overhead by 15%.

Machine Learning

Deploying machine learning models on Kubernetes allows users to run their entire machine learning workflow in one place. Kubernetes can run machine learning workflows both locally and in the cloud, which improves flexibility and efficiency.

Use Kubernetes to improve machine learning processes by:

Scaling the available resources (e.g., GPUs) to fit the needs of the model.

Enabling gradual upgrades of stages and nodes to lower downtime.

Automating many aspects of the workflow, such as health checks and resource and container management.

Leveraging the portability of the system.
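Gradual upgrades on Kubernetes are typically done with a Deployment's `RollingUpdate` strategy. Its `maxSurge` and `maxUnavailable` fields, both 25% by default, bound how many extra pods may be created and how many may be unavailable during the rollout. Per the Kubernetes documentation, percentages are rounded up for `maxSurge` and down for `maxUnavailable`. The sketch below computes the resulting bounds. The function name is hypothetical, and it assumes values are passed as strings such as `"25%"` or `"1"`.

```python
def rolling_update_bounds(replicas: int, max_surge: str = "25%",
                          max_unavailable: str = "25%") -> tuple[int, int]:
    """Return (max pods allowed, min pods available) during a rolling update."""

    def resolve(value: str, round_up: bool) -> int:
        if value.endswith("%"):
            scaled = replicas * int(value[:-1])
            # Integer ceil/floor division avoids floating-point rounding surprises.
            return -(-scaled // 100) if round_up else scaled // 100
        return int(value)  # absolute number of pods

    surge = resolve(max_surge, round_up=True)               # maxSurge rounds up
    unavailable = resolve(max_unavailable, round_up=False)  # maxUnavailable rounds down
    return replicas + surge, replicas - unavailable


for n in (1, 4, 10):
    print(n, rolling_update_bounds(n))
```

With a single replica, the defaults allow one extra pod and zero unavailable pods. The new pod must therefore become available before the old one is removed, which is how the rollout keeps downtime low.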

Conclusion

This article listed and explained the most important use cases for the Kubernetes container orchestration platform.

Learn more about the benefits of container orchestration tools by reading a guide on how container orchestration works.